kottke.org posts about Alexis Madrigal

On Friday, November 13, 170,792 new cases of Covid-19 were reported in the United States. About 3000 of those people will die from their disease on Dec 6 — one day of Covid deaths equal to the number of people who died on 9/11. It’s already baked in, it’s already happened. Here’s how we know.

The case fatality rate (or ratio) for a disease is the number of confirmed deaths divided by the number of confirmed cases. For Covid-19 in the United States, the overall case fatality rate (CFR) is 2.3%. That is, since the beginning of the pandemic, 2.3% of those who have tested positive for Covid-19 in the US have died. In India, it’s 1.5%, Germany is at 1.6%, Iran 5.5%, and in Mexico it’s a terrifying 9.8%.

A recent analysis by infectious disease researcher Trevor Bedford tells us two things related to the CFR.

1. Reported deaths from Covid-19 lag behind reported cases by 22 days. Some deaths are reported sooner and some later, but in general it’s a 22-day lag.

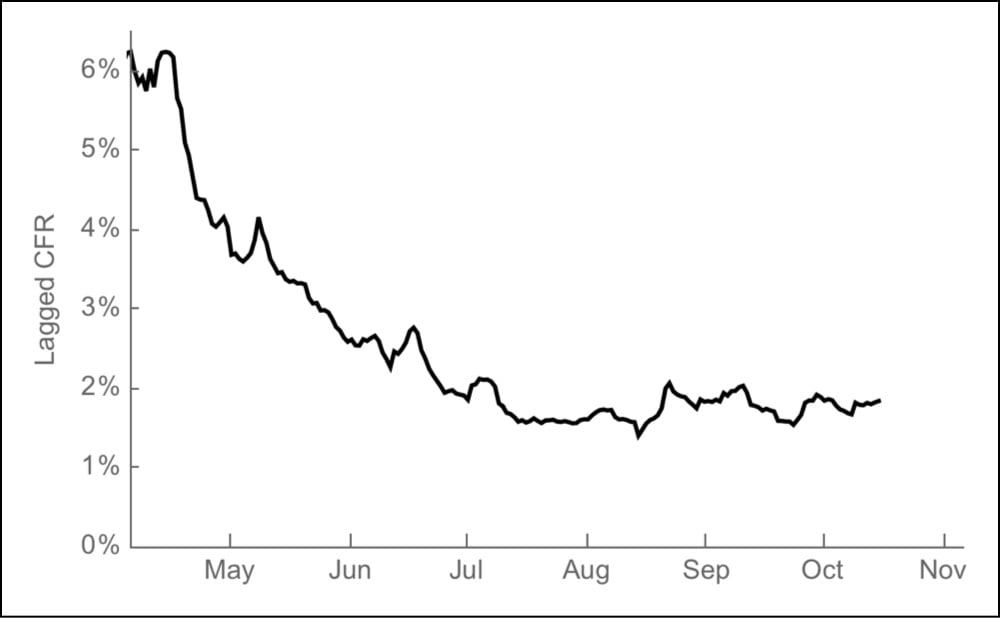

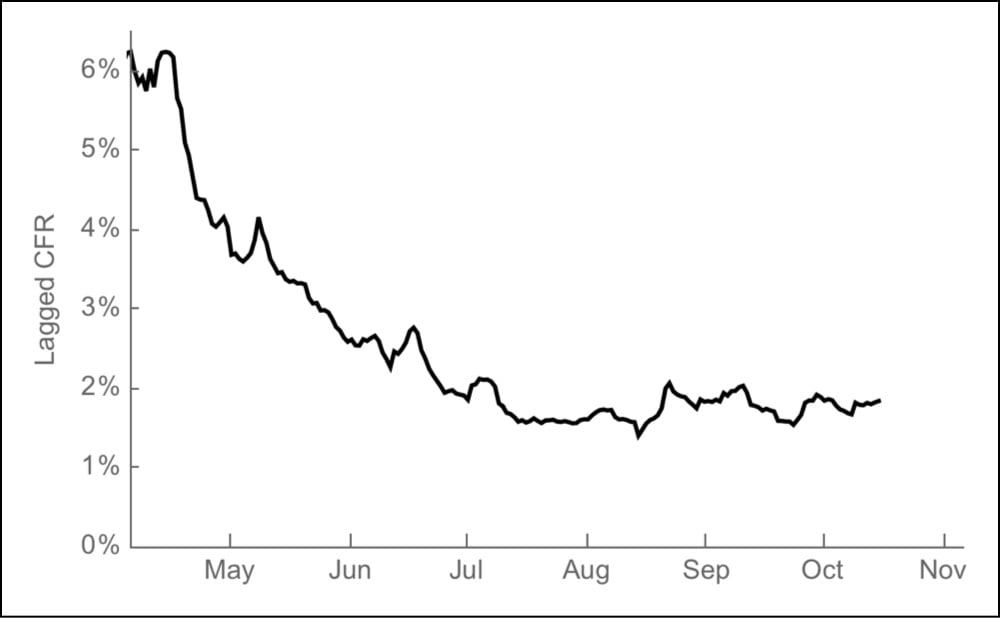

2. The overall CFR in the US is 2.3% but if you use the 22-day lag to calculate what Bedford calls “a lag-adjusted case fatality rate”, it’s a pretty steady average of 1.8% since August. Here’s a graph:

As you can see, in the early days of the pandemic, 4-6% of the cases ended in death and now that’s down to ~1.8%. That’s good news! The less good news is that the current case rate is high and rising quickly. Because of the lag in reported deaths, the rise in cases might not seem that alarming to some, even though those deaths will eventually happen. What Bedford’s analysis provides is a quick way to estimate the number of deaths that will occur in the future based on the number of cases today: just multiply the number of a day’s cases by 1.8% and you get an estimated number of people who will die 22 days later.
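
For the curious, here is a minimal sketch of how a lag-adjusted rate like this could be computed from two daily series. It is one plausible reading of the idea, not Bedford's actual code, and his analysis may differ in details such as smoothing. The function names and the toy numbers are made up for illustration (the toy series is not real Covid-19 data, and it uses a 2-day lag just to stay short).

```python
def case_fatality_rate(total_deaths, total_cases):
    """Plain CFR: confirmed deaths divided by confirmed cases."""
    return total_deaths / total_cases


def lagged_cfr(daily_cases, daily_deaths, lag_days=22):
    """Deaths reported on day t divided by cases reported lag_days earlier.

    Returns one rate per day from index lag_days onward; days whose
    lagged case count is zero yield None instead of dividing by zero.
    """
    rates = []
    for t in range(lag_days, len(daily_deaths)):
        earlier_cases = daily_cases[t - lag_days]
        rates.append(daily_deaths[t] / earlier_cases if earlier_cases else None)
    return rates


# Made-up toy series (NOT real Covid-19 data), with a 2-day lag so it stays short.
toy_cases = [1000, 2000, 4000, 8000, 16000]
toy_deaths = [0, 0, 18, 36, 72]

naive = case_fatality_rate(sum(toy_deaths), sum(toy_cases))
rates = lagged_cfr(toy_cases, toy_deaths, lag_days=2)

print(f"naive CFR: {naive:.4f}")
print("lagged CFR by day:", [f"{r:.3f}" for r in rates])
```

In this toy series cases double every day, so the naive ratio comes out far lower than the lagged one: most of the cases in the denominator are too recent to have produced reported deaths yet.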

For instance, as I said above, 170,792 cases were reported on Nov 13; 1.8% of that is 3074 deaths to be reported on December 6. Cases have been over 100,000 per day for 12 days now; here are the estimated deaths from that time period:

| Date | Cases | Est. deaths (on date) |
| --- | --- | --- |
| 2020-11-15 | 145,670 | 2622 (2020-12-08) |
| 2020-11-14 | 163,473 | 2943 (2020-12-07) |
| 2020-11-13 | 170,792 | 3074 (2020-12-06) |
| 2020-11-12 | 150,526 | 2709 (2020-12-05) |
| 2020-11-11 | 144,499 | 2601 (2020-12-04) |
| 2020-11-10 | 130,989 | 2358 (2020-12-03) |
| 2020-11-09 | 118,708 | 2137 (2020-12-02) |
| 2020-11-08 | 110,838 | 1995 (2020-12-01) |
| 2020-11-07 | 129,191 | 2325 (2020-11-30) |
| 2020-11-06 | 125,252 | 2255 (2020-11-29) |
| 2020-11-05 | 116,153 | 2091 (2020-11-28) |
| 2020-11-04 | 103,067 | 1855 (2020-11-27) |
| Totals | 1,609,158 | 28,965 |
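
If you'd rather check the arithmetic than take the table on faith, a short sketch like this reproduces the estimated-deaths column and the totals. The case counts are copied from the table; the names `CASES_BY_DATE`, `LAGGED_CFR`, and `estimate_deaths` are just illustrative, and rounding to the nearest whole number is an assumption about how the table's figures were produced.

```python
# Daily reported US cases, copied from the table above.
CASES_BY_DATE = {
    "2020-11-04": 103_067,
    "2020-11-05": 116_153,
    "2020-11-06": 125_252,
    "2020-11-07": 129_191,
    "2020-11-08": 110_838,
    "2020-11-09": 118_708,
    "2020-11-10": 130_989,
    "2020-11-11": 144_499,
    "2020-11-12": 150_526,
    "2020-11-13": 170_792,
    "2020-11-14": 163_473,
    "2020-11-15": 145_670,
}

LAGGED_CFR = 0.018  # the ~1.8% lag-adjusted case fatality rate from the post


def estimate_deaths(cases, cfr=LAGGED_CFR):
    """Estimated eventual deaths from one day's reported cases (assumes simple rounding)."""
    return round(cases * cfr)


estimates = {day: estimate_deaths(n) for day, n in CASES_BY_DATE.items()}
total_cases = sum(CASES_BY_DATE.values())
total_deaths = sum(estimates.values())

for day, n in CASES_BY_DATE.items():
    print(f"{day}  {n:>9,}  {estimates[day]:>6,}")
print(f"Totals      {total_cases:>9,}  {total_deaths:>6,}")
```

Swapping in a different `cfr` value is an easy way to see how sensitive the totals are to that one assumption.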

Starting the day after Thanksgiving, a day traditionally called Black Friday, the 1.6 million positive cases reported in the past 12 days will result in 2000-3000 deaths per day from then into the first week of December. Statistically speaking, these deaths have already occurred; as Bedford says, they are “baked in”. If the lagged CFR stays at ~1.8% (it could increase due to an overtaxed medical system) and the number of cases keeps rising, the daily death toll will get even worse. As daily case totals are reported, you can just do the math yourself:

number of cases × 0.018

200,000 cases in a day would be ~3600 deaths. 300,000 daily cases, a number that would have been inconceivable in May but is now within the realm of possibility, would result in 5400 deaths in a single day. Vaccines are coming; there is hope on the horizon. But make no mistake: this is an absolute unmitigated catastrophe for the United States.

Update: Over at The Atlantic, Alexis Madrigal and Whet Moser took a closer look at Bedford’s model, aided by Ryan Tibshirani’s analysis.

Tibshirani’s first finding was that the lag time between states was quite variable, and that the median lag time was 16 days, a lot shorter than the mean. Looking state by state, Tibshirani concluded, it seemed difficult to land on an exact number of days as the “right” lag “with any amount of confidence,” he told us. Because cases are rising quickly, a shorter lag time would mean a larger denominator of cases for recent days, and a lower current case-fatality rate, something like 1.4 percent. This could mean fewer overall people are dying.

But this approach does not change the most important prediction. The country will still cross the threshold of 2,000 deaths a day, and even more quickly than Bedford originally predicted. Cases were significantly higher 16 days ago than 22 days ago, so a shorter lag time means that those higher case numbers show up in the deaths data sooner. Even with a lower case-fatality rate, deaths climb quickly. Estimating this way, the country would hit an average of 2,000 deaths a day on November 30.

The other major finding in Tibshirani’s analysis is that the individual assumptions and parameters in a Bedford-style model don’t matter too much. You can swap in different CFRs and lag-time parameters, and the outputs are more consistent than you might expect. They are all bad news. And, looking retrospectively, Tibshirani found that a reasonable, Bedford-style lagged-CFR model would have generated more accurate national-death-count predictions than the CDC’s ensemble model since July.

Not all hour-long podcasts are worthwhile, but I found this one by The Atlantic’s Matt Thompson and Alexis Madrigal to be pretty compelling. The subject: how to fix social media, or rather, how to create a variation on social media that allows you to properly pose the question as to whether or not it can be fixed.

For both Matt and Alexis, social media (and in particular, Twitter) is not especially usable or desirable in the form in which it presents itself. Both Matt and Alexis have shaped and truncated their Twitter experience. In Alexis’s case, this means going read-only, not posting tweets any more, and just using Twitter as an algorithmic feed reader by way of Nuzzel, catching the links his friends are discussing, and in some cases, the tweets they’re posting about those links. Matt is doing something slightly different: calling on his friends not to like or retweet his ordinary Twitter posts, but to reply to his tweets in an attempt to start a conversation.

Both Matt and Alexis are, in their own way, trying to inject something of the old spirit of the blogosphere into their social media use. In Alexis’s case, it’s the socially mediated newsreader function. In Matt’s, it’s the comment thread, the great discussions we used to have on blogs like Snarkmarket.

(Full disclosure: I was a longtime commenter on Matt and Robin Sloan’s blog Snarkmarket from 2003 to 2008, until I was elevated into a full third member of the site, where I posted pretty regularly until about 2013, when our blog, like so many others, began to wind down, replaced by both social media and professional news sites. I was also one of the early contributors to Alexis’s Tech section at The Atlantic starting in 2010, which is also held aloft as a blog standard during this podcast. So I have some skin in this game.)

Also worth reading into this discussion: Anil Dash’s 20th anniversary roundtable at Function with Bruce Ableson, Lisa Phillips, and Andrew Smales, which pretty explicitly (and usefully!) constructs the early blogosphere as the precursor to contemporary social media.

It’s easy to look at Twitter and look at Facebook, and look at the things that are happening, and how awful people are to each other, and say: the world would be better off without the internet. And I don’t believe that. I think that there’s still space where people can be good to each other.

So here’s the thing:

- The blogosphere was not always better than the contemporary social web;

- The blogosphere felt like it was getting better in a way that the contemporary social web does not.

And that turns out to make a huge difference! I mean, in general, the world was sort of a crummy place in the early 2000s. (The late 1990s were actually good.) But on the web side, especially, things in the early 2000s felt like they were getting better. Services were improving, more information was coming online, storage and computing power (both locally and in the cloud) were improving in a way that felt tangible, people were getting more connected, those connections felt more powerful and meaningful. It was the heroic phase of the web, even as it was also the time that decisions were being made that were going to foreclose on a lot of those heroic possibilities.

A lot of the efforts to reshape social media, or to walk away from it in favor of RSS feeds or something else, are really attempts to recapture those utopian elements that were active in the zeitgeist ten, fifteen, and twenty years ago. They still exercise a powerful hold over our collective imagination about what the internet is, and could be, even when they take the form of dashed hopes and stifled dreams.

I feel like I can speak to this quite personally. Ten years ago, I was just another graduate student in a humanities program stuck with a shitty job market, layered atop what were already difficult career prospects to begin with. The only thing I had going for me that the average literary modernist didn’t was that I was writing for a popular blog with two very talented young journalists who liked to think about the future of media. That pulled me in a definite direction in terms of the kinds of things I wrote about (yes, Walter Benjamin, but also Google Books), and the places where I ended up writing them (Kottke.org, The Atlantic, and eventually Wired). So instead of being an unemployable humanist, I became an underemployed journalist.

At the same time, the blogosphere, while crucial, has only offered so much velocity and so much gravity. By which I mean: it’s only propelled my career so far, and the blogs I’ve written for (Kottke notwithstanding) have only had so much ability to retain me before they’ve changed their business model, changed management, gone out of business, or been quietly abandoned. They’re little asteroids, not planets. Most of the proper publications I’ve written for, even the net-native ones, have been dense enough to hold an atmosphere.

And guess what? So have Twitter and Facebook. Just by enduring, those places have become places for lasting connections and friendships and career opportunities, in a way the blogosphere never was, at least for me. (Maybe this is partly a function of timing, but look: I was there.) And this means that, despite their toxicity, despite their shortcomings, despite all the promises that have gone unfulfilled, Twitter and Facebook have continued to matter in a way that blogs don’t.

For good or for ill, Twitter lets you take the roof off and contact people you’d otherwise never reach. The question, I think, is whether you have to tack that roof back on again in order to get the valuable newsgathering and conversation elements that people once found so compelling about the blogosphere, or whether there’s some other form of modification that can be made to build in proper protections.

The other question is whether there can be anything like a one-size-fits-all solution to the problem of social media. I suspect there isn’t, just because people are at different points on their career trajectories, which shapes their needs and wants vis-a-vis social media accordingly. Some of us are still trying to blow up, or (in some cases) remind the world what they liked about us to begin with. Others of us are just trying to do our jobs and get through the day. Many more still have little capital to trade on to begin with, and are just looking for some kind of meaningful interaction to give us a reason why we logged in in the first place. The fact that this is the largest group, for whom the tools are the least well-suited, and who were promised the most by social media’s ascendancy, is the great tragedy of the form.

Maybe we need to ask ourselves, what was it that we wanted from the blogosphere in the first place? Was it a career? Was it just a place to write and be read by somebody, anybody? Was it a community? Maybe it began as one thing and turned into another. That’s OK! But I don’t think we can treat the blogosphere as a settled thing, when it was in fact never settled at all. Just as social media remains unsettled. Its fate has not been written yet. We’re the ones who’ll have to write it.

Alexis Madrigal is great at the systemic sublime — taking an everyday object or experience and showing how it implicates interconnected networks across space, time, and levels of analysis. His history of the drinking straw — or rather, “a history of modern capitalism from the perspective of the drinking straw” — is no exception. It doesn’t give quite enough space to disability, either in its history or its examination of the straw’s future — the stakes of that debate are better-covered in this David Perry essay from a year ago — but there are still plenty of goodies.

Temperance and public health grew up together in the disease-ridden cities of America, where despite the modern conveniences and excitements, mortality rates were higher than in the countryside. Straws became a key part of maintaining good hygiene and public health. They became, specifically, part of the answer to the scourge of unclean drinking glasses. Cities begin requiring the use of straws in the late 1890s. A Wisconsin paper noted in 1896 that already in many cities “ordinances have been issued making the use of wrapped drinking straws essential in public eating places.”

Add in urbanization, the suddenly cheap cost of wood-pulp paper goods, and voila: you get soda fountains and disposable straws, soon followed by disposable paper cups, and eventually, their plastic successors, manufactured by a handful of giant companies to the specifications of a handful of other giant companies, in increasingly automated processes for the benefit of shareholders. It’s a heck of a yarn.

Alexis Madrigal is back at The Atlantic, where he’ll be writing about technology, science, and business. His first piece is a reflection on how the Internet has changed in the 10 years he’s been writing about it. In 2007, the Web was triumphant. But then came apps and Facebook and other semi-walled gardens:

O’Reilly’s lengthy description of the principles of Web 2.0 has become more fascinating through time. It seems to be describing a slightly parallel universe. “Hyperlinking is the foundation of the web,” O’Reilly wrote. “As users add new content, and new sites, it is bound into the structure of the web by other users discovering the content and linking to it. Much as synapses form in the brain, with associations becoming stronger through repetition or intensity, the web of connections grows organically as an output of the collective activity of all web users.”

Nowadays, (hyper)linking is an afterthought because most of the action occurs within platforms like Facebook, Twitter, Instagram, Snapchat, and messaging apps, which all have carved space out of the open web.

That strategy has made the top tech companies insanely valuable:

In mid-May of 2007, these five companies were worth $577 billion. Now, they represent $2.9 trillion worth of market value! Not so far off from the combined market cap ($2.85T) of the top 10 largest companies in the second quarter of 2007: Exxon Mobil, GE, Microsoft, Royal Dutch Shell, AT&T, Citigroup, Gazprom, BP, Toyota, and Bank of America.

In 2007, I wrote a piece (and a follow-up) about how Facebook was the new AOL and how their walled garden strategy was doomed to fail in the face of the open Web. The final paragraph of that initial post is a good example of the Web triumphalism described by Madrigal but hasn’t aged well:

As it happens, we already have a platform on which anyone can communicate and collaborate with anyone else, individuals and companies can develop applications which can interoperate with one another through open and freely available tools, protocols, and interfaces. It’s called the internet and it’s more compelling than AOL was in 1994 and Facebook in 2007. Eventually, someone will come along and turn Facebook inside-out, so that instead of custom applications running on a platform in a walled garden, applications run on the internet, out in the open, and people can tie their social network into it if they want, with privacy controls, access levels, and alter-egos galore.

The thing is, Facebook did open up…they turned themselves inside-out and crushed the small pieces loosely joined contingent. They let the Web flood in but caught the Web’s users and content creators before they could wash back out again. The final paragraph of the follow-up piece fared much better in hindsight:

At some point in the future, Facebook may well open up, rendering much of this criticism irrelevant. Their privacy controls are legendarily flexible and precise…it should be easy for them to let people expose parts of the information to anyone if they wanted to. And as Matt Webb pointed out to me in an email, there’s the possibility that Facebook turn itself inside out and be the social network bit for everyone else’s web apps. In the meantime, maybe we shouldn’t be so excited about the web’s future moving onto an intranet.

What no one saw back then, about a week after the release of the original iPhone, was how apps on smartphones would change everything. In a non-mobile world, these companies and services would still be formidable but if we were all still using laptops and desktops to access information instead of phones and tablets, I bet the open Web would have stood a better chance.

Alexis Madrigal wonders: when did the idea of the dinner reservation come about?

Reserving a table is not so much an “industrial age bolt-on” as it’s a slippage from the older custom of reserving a ROOM in a restaurant. As my book explains, 18th-cy “caterers” [traiteurs] either served clients in their homes or in rooms at the traiteur’s, the first self-styled restaurateurs borrowed from cafes in having lots of small tables in one big room. Throughout the nineteenth century, many big city restaurants continued to have both a (very) large public eating room with numerous, small (private) tables AND a number of smaller rooms that could be reserved for more private meals. (Much as some restaurants have special “banquet facilities” or “special occasion” rooms today.)

See also: the first NY Times restaurant review circa 1859.

Rob Walker asked some tech writers what their most outdated gadget was. Alexis Madrigal pretty much answers for me:

“I think it’s the sound system in our car [2003 Volkswagen Golf TDI],” Madrigal says. “We have one of those magical devices that lets you play an iPod through the tape deck (how do those work?), but it makes a horrible screeching noise when it gets hot.” That leaves the CD player and terrestrial radio: “We seem to rotate between the same three CDs we burned or borrowed some time ago, and the local NPR affiliate.”

Madrigal hastens to add that what he really wants is a stereo with “an aux-in so that I can play Rdio throughout the vehicle.” The problem? “I am scared of car audio guys,” he says. “I knew a lot of them in high school. They are a kind of gadgethead that just kind of freaks me out. I loathe the idea of going in there and having to explain why we have this old-ass tape deck, and then, because I don’t know any better, getting ripped off on a new stereo.”

It’s either that or our cable box/DVR…that thing records about 20 minutes of HD programming and is 20 years old now. Really should trade it in for something made since Clinton left office. See also Robin Sloan’s dumbphone.

One of my favorite magazine pieces is Truman Capote’s long profile of Marlon Brando from the Nov 9, 1957 issue of the New Yorker.

He hung up, and said, “Nice guy. He wants to be a director, eventually. I was saying something, though. We were talking about friends. Do you know how I make a friend?” He leaned a little toward me, as though he had an amusing secret to impart. “I go about it very gently. I circle around and around. I circle. Then, gradually, I come nearer. Then I reach out and touch them, ah, so gently…” His fingers stretched forward like insect feelers and grazed my arm. “Then,” he said, one eye half shut, the other, à la Rasputin, mesmerically wide and shining, “I draw back. Wait awhile. Make them wonder. At just the right moment, I move in again. Touch them. Circle.” Now his hand, broad and blunt-fingered, travelled in a rotating pattern, as though it held a rope with which he was binding an invisible presence. “They don’t know what’s happening. Before they realize it, they’re all entangled, involved. I have them. And suddenly, sometimes, I’m all they have. A lot of them, you see, are people who don’t fit anywhere; they’re not accepted, they’ve been hurt, crippled one way or another. But I want to help them, and they can focus on me; I’m the duke. Sort of the duke of my domain.”

In a piece for Columbia Journalism Review, Douglas McCollam details how Capote got access to the reclusive star when he was filming Sayonara in Japan.

Logan had no intention of subjecting his own cast and crew to the same withering scrutiny. In particular, he was concerned about what might happen if Capote gained access to his mercurial leading man. Though Brando was notoriously press-shy, and Logan doubted Capote’s ability to crack the star’s enigmatic exterior, he wasn’t taking any chances. He and William Goetz, Sayonara’s producer, had both written to The New Yorker stating that they would not cooperate for the piece and, furthermore, that if Capote did journey to Japan he would be barred from the set. Nevertheless, Capote had come.

As Logan later recounted, his reaction to Capote’s sudden appearance was visceral. He came up behind Capote, and without saying a word, picked the writer up and transported him across the lobby, depositing him outside the front door of the hotel. “Now come on, Josh!” Capote cried. “I’m not going to write anything bad.”

Logan went immediately upstairs to Brando’s room to deliver a warning: “Don’t let yourself be left alone with Truman. He’s after you.” His warning would go unheeded. Recalling his reaction to Capote, Logan later wrote, “I had a sickening feeling that what little Truman wanted, little Truman would get.”

Alexis Madrigal wrote about Capote’s Brando piece for the first installment of Nieman Storyboard’s Why’s This So Good series about classic pieces of narrative nonfiction.

The Atlantic’s Alexis Madrigal gets an inside look at how Google builds its maps (and what that means for the future of everything). “If Google’s mission is to organize all the world’s information, the most important challenge — far larger than indexing the web — is to take the world’s physical information and make it accessible and useful.”

From Sarah Rich and Alexis Madrigal, a story on a company that might be “the Pixar of the iPad age”, Moonbot Studios. Moonbot made a wonderfully inventive iPad book called The Fantastic Flying Books of Mr. Morris Lessmore.

Morris Lessmore may be the best iPad book in the world. In July, Morris Lessmore hit the number one spot on Apple’s iPad app chart in the US. That is to say, Morris Lessmore wasn’t just the bestselling book, but the bestselling *app* of any kind for a time. At one point or another, it has been the top book app in 21 countries. A New York Times reviewer called it “the best,” “visually stunning,” and “beautiful.” Wired.com called it “game-changing.” MSNBC said it was “the most stunning iPad app so far.” And The Times UK made this prediction, “It is not inconceivable that, at some point in the future, a short children’s story called ‘The Fantastic Flying Books of Mr. Morris Lessmore’ will be regarded as one of the most influential titles of the early 21st century.”

At The Atlantic, Alexis Madrigal has an interesting post about Apple as a religion and uses that lens to look at the so-called Antennagate** brouhaha. For example, Apple was built on four key myths:

1. a creation myth highlighting the counter-cultural origin and emergence of the Apple Mac as a transformative moment;

2. a hero myth presenting the Mac and its founder Jobs as saving its users from the corporate domination of the PC world;

3. a satanic myth that presents Bill Gates as the enemy of Mac loyalists;

4. and, finally, a resurrection myth of Jobs returning to save the failing company…

On Twitter, Tim Carmody adds that Apple’s problems are increasingly theological in nature — “Free will, problem of evil, Satanic rebellion” — which is a really interesting way to look at the whole thing. (John Gruber the Baptist?)

** The Antennagate being, of course, the hotel where Apple Inc. is headquartered.
