A History of Regular Expressions and Artificial Intelligence

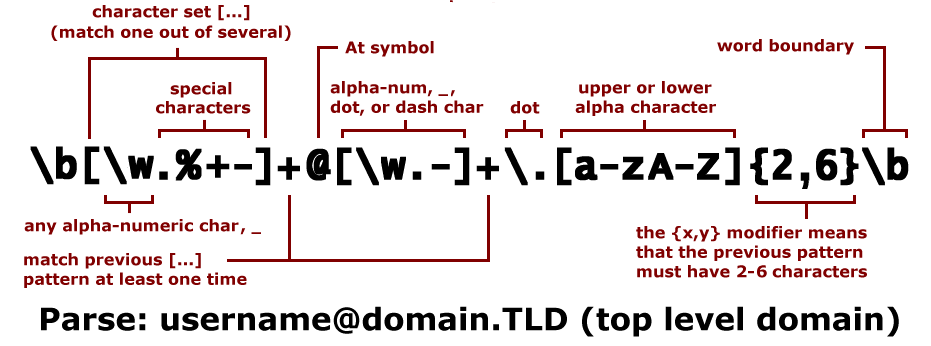

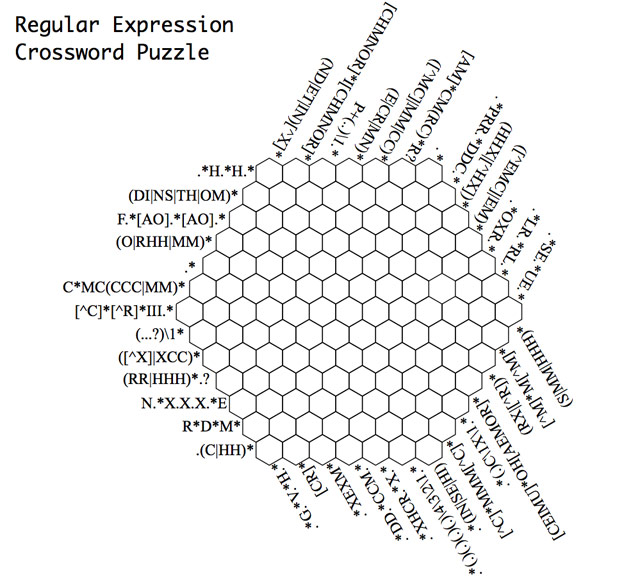

I have an unusually good memory, especially for symbols, words, and text, but since I don’t use regular expressions (ahem) regularly, they’re one of those parts of computer programming and HTML/EPUB editing that I find myself relearning over and over each time I need them. How did something this arcane but powerful even get started? Naturally, its creators were trying to discover (or model) artificial intelligence.

That’s the crux of this short history of “regex” by Buzz Andersen over at “Why is this interesting?”

The term itself originated with mathematician Stephen Kleene. In 1943, neuroscientist Warren McCulloch and logician Walter Pitts had just described the first mathematical model of an artificial neuron, and Kleene, who specialized in theories of computation, wanted to investigate what networks of these artificial neurons could, well, theoretically compute.

In a 1951 paper for the RAND Corporation, Kleene reasoned about the types of patterns neural networks were able to detect by applying them to very simple toy languages—so-called “regular languages.” For example: given a language whose “grammar” allows only the letters “A” and “B”, is there a neural network that can detect whether an arbitrary string of letters is valid within the “A/B” grammar or not? Kleene developed an algebraic notation for encapsulating these “regular grammars” (for example, a*b* in the case of our “A/B” language), and the regular expression was born.

Kleene’s work was later expanded upon by such luminaries as linguist Noam Chomsky and AI researcher Marvin Minsky, who formally established the relationship between regular expressions, neural networks, and a class of theoretical computing abstraction called “finite state machines.”
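To make that regex/finite-state-machine relationship a little more concrete, here is a minimal Python sketch of the a*b* example. It checks strings two ways: with Python's built-in `re` module, and with a tiny hand-rolled state machine. The state names (`in_a`, `in_b`) and function names are my own inventions for illustration, not anything from Kleene's paper or Andersen's piece.

```python
import re

# The pattern from the example: zero or more a's, then zero or more b's.
PATTERN = re.compile(r"a*b*")


def matches_regex(s: str) -> bool:
    """Check the whole string against a*b* using the standard library."""
    return PATTERN.fullmatch(s) is not None


# A hypothetical two-state machine for the same language.
# "in_a": still reading a's; "in_b": we've seen a b, so only b's are allowed.
TRANSITIONS = {
    ("in_a", "a"): "in_a",
    ("in_a", "b"): "in_b",
    ("in_b", "b"): "in_b",
}
ACCEPTING = {"in_a", "in_b"}


def matches_dfa(s: str) -> bool:
    """Walk the state machine one character at a time."""
    state = "in_a"
    for ch in s:
        state = TRANSITIONS.get((state, ch))
        if state is None:  # no legal move, e.g. an 'a' after a 'b'
            return False
    return state in ACCEPTING


if __name__ == "__main__":
    for sample in ["", "aabbb", "ba", "abc"]:
        print(repr(sample), matches_regex(sample), matches_dfa(sample))
```

Both functions should agree on every input: strings like "aabbb" (and the empty string) are accepted, while "ba" or anything with a stray letter is rejected.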

This whole line of inquiry soon falls apart, for reasons both structural and interpersonal: Pitts, McCulloch, and Jerome Lettvin (another early AI researcher) have a big falling out with Norbert Wiener (of cybernetics fame), Minsky writes a book (Perceptrons) that throws cold water on the whole simple-neural-network-as-model-of-the-human-mind thing, and Pitts drinks himself to death. Minsky later gets mixed up with Jeffrey Epstein’s philanthropy/sex trafficking ring. The world of early theoretical AI is just weird.

But! Ken Thompson, one of the creators of UNIX at Bell Labs, comes along and starts using regexes for text editor searches in 1968. And renewed takes on neural networks come along in the 21st century that give some of that older research new life for machine learning and other algorithms. So, until Skynet/global warming kills us all, it all kind of works out? At least, intellectually speaking.

(Via Jim Ray)
