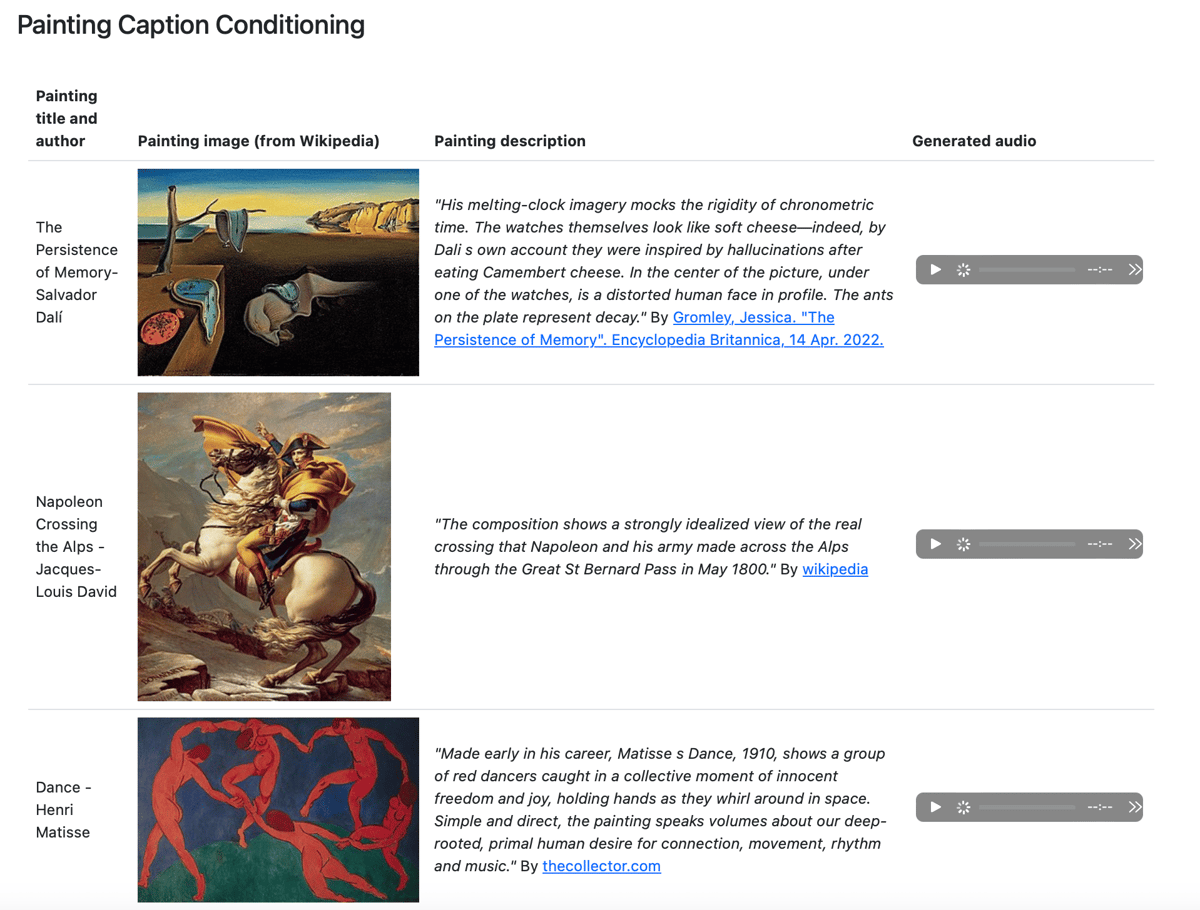

Google’s MusicLM Generates Music from Text

Google Research has released a new generative AI tool called MusicLM. MusicLM can generate new musical compositions from text prompts, which can either describe the music to be played (e.g., “The main soundtrack of an arcade game. It is fast-paced and upbeat, with a catchy electric guitar riff. The music is repetitive and easy to remember, but with unexpected sounds, like cymbal crashes or drum rolls”) or be more emotional and evocative (“Made early in his career, Matisse’s Dance, 1910, shows a group of red dancers caught in a collective moment of innocent freedom and joy, holding hands as they whirl around in space. Simple and direct, the painting speaks volumes about our deep-rooted, primal human desire for connection, movement, rhythm and music”).

As the last example suggests, since music can be generated from just about any text, anything that can be translated/captioned/captured in text, from poetry to paintings, can be turned into music.

It may seem strange that so many AI tools are coming to fruition in public all at once, but at Ars Technica, investor Haomiao Huang argues that once the basic AI toolkit reached a certain level of sophistication, a confluence of new products taking advantage of those research breakthroughs was inevitable:

> To sum up, the breakthrough with generative image models is a combination of two AI advances. First, there’s deep learning’s ability to learn a “language” for representing images via latent representations. Second, models can use the “translation” ability of transformers via a foundation model to shift between the world of text and the world of images (via that latent representation).
>
> This is a powerful technique that goes far beyond images. As long as there’s a way to represent something with a structure that looks a bit like a language, together with the data sets to train on, transformers can learn the rules and then translate between languages. Github’s Copilot has learned to translate between English and various programming languages, and Google’s Alphafold can translate between the language of DNA and protein sequences. Other companies and researchers are working on things like training AIs to generate automations to do simple tasks on a computer, like creating a spreadsheet. Each of these are just ordered sequences.

The other thing that’s different about the new wave of AI advances, Huang says, is that they’re not especially dependent on huge computing power at the edge. So AI is rapidly becoming much more ubiquitous than it’s been… even if MusicLM’s sample set of tunes still crashes my web browser.
